Hadoop Installation On Windows 7

1. Introduction


Hadoop version 2.2 onwards includes native support for Windows. The official Apache Hadoop releases do not include Windows binaries (yet, as of January 2014). However, building a Windows package from the sources is fairly straightforward.

Hadoop is a complex system with many components. Some high-level familiarity with them is helpful before attempting to build or install it for the first time. Familiarity with Java is necessary in case you need to troubleshoot.

2. Building Hadoop Core for Windows

2.1. Choose target OS version

The Hadoop developers have used Windows Server 2008 and Windows Server 2008 R2 during development and testing. Windows Vista and Windows 7 are also likely to work because of the Win32 API similarities with the respective server SKUs. We have not tested on Windows XP or any earlier versions of Windows and these are not likely to work. Any issues reported on Windows XP or earlier will be closed as Invalid.

Do not attempt to run the installation from within Cygwin. Cygwin is neither required nor supported.

2.2. Choose Java Version and set JAVA_HOME

Oracle JDK versions 1.7 and 1.6 have been tested by the Hadoop developers and are known to work.

Make sure that JAVA_HOME is set in your environment and does not contain any spaces. If your default Java installation directory has spaces, then you must use the Windows 8.3 pathname instead, e.g. c:\Progra~1\Java\... instead of c:\Program Files\Java\...
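The JAVA_HOME check can be scripted with a small Python sketch. The function names java_home_problems and short_path are hypothetical and are not part of Hadoop. The sketch flags three problems: an unset JAVA_HOME, a value containing spaces, and a value that points at the JDK's bin folder instead of its root. On Windows it can also ask the Win32 GetShortPathNameW function for the 8.3 form of an existing path. If 8.3 name generation is disabled on the volume, the returned "short" path may simply be the long path.

```python
import ntpath
import os
import sys


def java_home_problems(java_home):
    """Return a list of likely problems with a JAVA_HOME value (empty list = looks OK)."""
    if not java_home:
        return ["JAVA_HOME is not set"]
    problems = []
    if " " in java_home:
        problems.append("JAVA_HOME contains spaces; use an 8.3 short path instead")
    # JAVA_HOME should name the JDK root, not its bin subfolder.
    if ntpath.basename(java_home.rstrip("\\/")).lower() == "bin":
        problems.append("JAVA_HOME points at a bin folder instead of the JDK root")
    return problems


def short_path(path):
    """Windows only: ask kernel32 for the 8.3 short form of an existing path."""
    import ctypes
    from ctypes import wintypes

    get_short = ctypes.windll.kernel32.GetShortPathNameW
    get_short.argtypes = [wintypes.LPCWSTR, wintypes.LPWSTR, wintypes.DWORD]
    get_short.restype = wintypes.DWORD
    size = get_short(path, None, 0)  # first call returns the required buffer size
    if size == 0:
        raise ctypes.WinError()
    buf = ctypes.create_unicode_buffer(size)
    if get_short(path, buf, size) == 0:
        raise ctypes.WinError()
    return buf.value


if __name__ == "__main__":
    value = os.environ.get("JAVA_HOME")
    for problem in java_home_problems(value):
        print("warning:", problem)
    if value and " " in value and sys.platform == "win32":
        print("suggested JAVA_HOME:", short_path(value))
```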

2.3. Getting Hadoop sources

The current stable release as of August 2014 is 2.5. The source distribution can be retrieved from the ASF download server or using subversion or git.

- From the ASF Hadoop download page or a mirror.

- Subversion URL: https://svn.apache.org/repos/asf/hadoop/common/branches/branch-2.5

- Git repository URL: git://git.apache.org/hadoop-common.git. After downloading the sources via git, switch to the stable 2.5 branch using git checkout branch-2.5, or use the appropriate branch name if you are targeting a newer version.

2.4. Installing Dependencies and Setting up Environment for Building

The BUILDING.txt file in the root of the source tree has detailed information on the list of requirements and how to install them. It also includes information on setting up the environment and a few quirks that are specific to Windows. It is strongly recommended that you read and understand it before proceeding.

2.5. A few words on Native IO support

Hadoop on Linux includes optional Native IO support. However, Native IO is mandatory on Windows, and without it you will not be able to get your installation working. You must follow all the instructions from BUILDING.txt to ensure that Native IO support is built correctly.

2.6. Build and Copy the Package files

To build a binary distribution, run the following command from the root of the source tree.

Note that this command must be run from a Windows SDK command prompt as documented in BUILDING.txt. A successful build generates a binary hadoop .tar.gz package in hadoop-dist\target.

The Hadoop version is present in the package file name. If you are targeting a different version then the package name will be different.
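This Python sketch locates the package and reads the version from its file name. It assumes the build left a hadoop-<version>.tar.gz file in hadoop-dist\target. The helper name find_hadoop_package is hypothetical.

```python
import glob
import os
import re

# Matches e.g. hadoop-2.5.0.tar.gz or hadoop-2.5.0-SNAPSHOT.tar.gz
PACKAGE_RE = re.compile(r"hadoop-(\d+(?:\.\d+)*(?:-[A-Za-z0-9]+)?)\.tar\.gz")


def find_hadoop_package(target_dir):
    """Return (path, version) for the first hadoop-<version>.tar.gz in target_dir."""
    for path in sorted(glob.glob(os.path.join(target_dir, "hadoop-*.tar.gz"))):
        match = PACKAGE_RE.fullmatch(os.path.basename(path))
        if match:
            return path, match.group(1)
    raise FileNotFoundError(f"no hadoop-<version>.tar.gz in {target_dir}")
```

Typical use from the root of the source tree: path, version = find_hadoop_package(r"hadoop-dist\target").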

2.7. Installation

Pick a target directory for installing the package. We use c:\deploy as an example. Extract the tar.gz file (e.g. hadoop-2.5.0.tar.gz) under c:\deploy. This will yield a directory structure like the following. If installing a multi-node cluster, then repeat this step on every node.
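Hadoop tarballs typically unpack into a top-level hadoop-<version> folder. Paths such as c:\deploy\etc\hadoop assume the files sit directly under c:\deploy. The Python sketch below strips that top-level folder while extracting. The helper name is hypothetical. The sketch does not rewrite hard-link targets, so treat it as a starting point rather than a general-purpose extractor.

```python
import os
import tarfile


def extract_hadoop(package, dest):
    """Extract package into dest, dropping the archive's top-level folder."""
    # Use the safer "data" extraction filter where this Python supports it.
    extra = {"filter": "data"} if hasattr(tarfile, "data_filter") else {}
    with tarfile.open(package, "r:gz") as tar:
        for member in tar.getmembers():
            parts = member.name.split("/", 1)
            if len(parts) < 2 or not parts[1]:
                continue  # the top-level hadoop-<version> entry itself
            member.name = parts[1]  # e.g. hadoop-2.5.0/etc/hadoop -> etc/hadoop
            if os.path.isabs(member.name) or ".." in member.name.split("/"):
                raise ValueError(f"unsafe path in archive: {member.name}")
            tar.extract(member, dest, **extra)
```

For example: extract_hadoop(r"hadoop-dist\target\hadoop-2.5.0.tar.gz", r"c:\deploy").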

3. Starting a Single Node (pseudo-distributed) Cluster

This section describes the absolute minimum configuration required to start a Single Node (pseudo-distributed) cluster and also run an example MapReduce job.

3.1. Example HDFS Configuration

Before you can start the Hadoop daemons, you will need to make a few edits to configuration files. The configuration file templates will all be found in c:\deploy\etc\hadoop, assuming your installation directory is c:\deploy.

First edit the file hadoop-env.cmd to add the following lines near the end of the file.
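The sketch below assumes a typical set of variables for this layout: HADOOP_PREFIX, HADOOP_CONF_DIR, YARN_CONF_DIR and an extended PATH. This selection is an assumption, not a verbatim copy of the required lines. The hypothetical helper hadoop_env_lines only builds the `set` lines for an install at c:\deploy, so they can be reviewed before being pasted into hadoop-env.cmd.

```python
import ntpath


def hadoop_env_lines(prefix):
    """Return `set` lines to append to hadoop-env.cmd for an install at prefix."""
    if " " in prefix:
        raise ValueError("install path must not contain spaces")
    conf_dir = ntpath.join(prefix, "etc", "hadoop")
    return [
        f"set HADOOP_PREFIX={prefix}",
        f"set HADOOP_CONF_DIR={conf_dir}",
        f"set YARN_CONF_DIR={conf_dir}",
        f"set PATH=%PATH%;{ntpath.join(prefix, 'bin')}",
    ]


if __name__ == "__main__":
    print("\n".join(hadoop_env_lines(r"c:\deploy")))
```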


Edit or create the file core-site.xml and make sure it has the following configuration key:

Edit or create the file hdfs-site.xml and add the following configuration key:

Finally, edit or create the file slaves and make sure it has the following entry:
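All Hadoop configuration files share one XML shape: a <configuration> element holding <property> entries, each with a <name> and a <value>. The Python sketch below writes that shape with xml.etree.ElementTree. The property values are assumptions for a single-node setup:

- fs.defaultFS is set to hdfs://localhost:9000. The host and port are placeholders.
- dfs.replication is set to 1, since a pseudo-distributed cluster has only one DataNode.

The sketch overwrites existing files, so merge by hand if you have already customized them. Helper names are hypothetical.

```python
import os
import xml.etree.ElementTree as ET


def write_hadoop_config(path, properties):
    """Write a Hadoop-style <configuration> file from a dict of name -> value."""
    root = ET.Element("configuration")
    for name, value in properties.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = str(value)
    ET.indent(root)  # pretty-print; available in Python 3.9+
    ET.ElementTree(root).write(path, encoding="utf-8", xml_declaration=True)


def write_single_node_hdfs_config(conf_dir):
    """core-site.xml, hdfs-site.xml and slaves for one node (values are assumptions)."""
    write_hadoop_config(os.path.join(conf_dir, "core-site.xml"),
                        {"fs.defaultFS": "hdfs://localhost:9000"})
    write_hadoop_config(os.path.join(conf_dir, "hdfs-site.xml"),
                        {"dfs.replication": 1})  # one DataNode, so one replica
    with open(os.path.join(conf_dir, "slaves"), "w") as f:
        f.write("localhost\n")
```

For example: write_single_node_hdfs_config(r"c:\deploy\etc\hadoop").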

The default configuration puts the HDFS metadata and data files under \tmp on the current drive. In the above example this would be c:\tmp. For your first test setup you can just leave it at the default.
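This Python sketch shows where the NameNode and DataNode directories end up. It assumes the stock defaults:

- hadoop.tmp.dir = /tmp/hadoop-${user.name}
- dfs.namenode.name.dir = file://${hadoop.tmp.dir}/dfs/name
- dfs.datanode.data.dir = file://${hadoop.tmp.dir}/dfs/data

The sketch drops the file:// prefix for readability. The helper name is hypothetical.

```python
import ntpath


def default_hdfs_dirs(drive, user):
    """Resolve the default HDFS storage dirs on a Windows drive (illustrative sketch)."""
    # /tmp/hadoop-<user> has no drive letter, so it lands on the current drive.
    tmp_dir = ntpath.join(drive + "\\", "tmp", f"hadoop-{user}")
    return {
        "dfs.namenode.name.dir": ntpath.join(tmp_dir, "dfs", "name"),
        "dfs.datanode.data.dir": ntpath.join(tmp_dir, "dfs", "data"),
    }
```

For a user named alice working on drive c:, the metadata would land in c:\tmp\hadoop-alice\dfs\name.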

3.2. Example YARN Configuration

Edit or create mapred-site.xml under %HADOOP_PREFIX%\etc\hadoop and add the following entries, replacing %USERNAME% with your Windows user name.

Finally, edit or create yarn-site.xml and add the following entries:
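The Python sketch below assumes a minimal, commonly used set of keys:

- In mapred-site.xml: mapreduce.framework.name = yarn, and mapreduce.job.user.name set to your Windows user name.
- In yarn-site.xml: the mapreduce_shuffle auxiliary service.

A real setup may need more keys, such as classpath or local directory settings. The helper names are hypothetical. The user name is read from the USERNAME environment variable unless passed explicitly.

```python
import os
from xml.sax.saxutils import escape


def config_xml(properties):
    """Render a dict of name -> value as Hadoop <configuration> XML text."""
    lines = ['<?xml version="1.0"?>', "<configuration>"]
    for name, value in properties.items():
        lines += [
            "  <property>",
            f"    <name>{escape(name)}</name>",
            f"    <value>{escape(str(value))}</value>",
            "  </property>",
        ]
    lines.append("</configuration>")
    return "\n".join(lines) + "\n"


def yarn_config_files(user=None):
    """Minimal mapred-site.xml / yarn-site.xml contents (key choice is an assumption)."""
    user = user or os.environ.get("USERNAME", "")  # Windows user name
    mapred = {
        "mapreduce.framework.name": "yarn",
        "mapreduce.job.user.name": user,
    }
    yarn = {
        "yarn.nodemanager.aux-services": "mapreduce_shuffle",
        "yarn.nodemanager.aux-services.mapreduce_shuffle.class":
            "org.apache.hadoop.mapred.ShuffleHandler",
    }
    return {"mapred-site.xml": config_xml(mapred), "yarn-site.xml": config_xml(yarn)}


def write_yarn_configs(conf_dir, user=None):
    """Overwrite both files in conf_dir, e.g. c:\\deploy\\etc\\hadoop."""
    for filename, text in yarn_config_files(user).items():
        with open(os.path.join(conf_dir, filename), "w", encoding="utf-8") as f:
            f.write(text)
```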

3.3. Initialize Environment Variables

Run c:\deploy\etc\hadoop\hadoop-env.cmd to set up environment variables that will be used by the startup scripts and the daemons.

3.4. Format the FileSystem

Format the filesystem with the following command:

This command will print a number of filesystem parameters. Just look for the following two strings to ensure that the format command succeeded.
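The format step can be scripted with Python's subprocess module, as sketched below.

- The success check looks for the phrase "has been successfully formatted". This is an assumption about the NameNode's log wording, not a guaranteed interface.
- The location of hdfs.cmd under %HADOOP_PREFIX%\bin is likewise an assumption.
- stdin is closed so that a confirmation prompt cannot block the script. Such a prompt can appear when the storage directory already exists.

```python
import os
import subprocess

# Assumption: the phrase the NameNode typically logs after a successful format.
SUCCESS_MARKER = "has been successfully formatted"


def format_succeeded(output):
    """True if the captured format output contains the success phrase."""
    return SUCCESS_MARKER in output


def format_namenode(hadoop_prefix):
    """Run `hdfs namenode -format` and report whether it appears to have succeeded."""
    hdfs = os.path.join(hadoop_prefix, "bin", "hdfs.cmd")
    result = subprocess.run(
        [hdfs, "namenode", "-format"],
        stdin=subprocess.DEVNULL,  # don't hang on a re-format prompt
        capture_output=True,
        text=True,
    )
    # Log output usually goes to stderr, so search both streams.
    return result.returncode == 0 and format_succeeded(result.stdout + result.stderr)
```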

3.5. Start HDFS Daemons

Run the following command to start the NameNode and DataNode on localhost.

To verify that the HDFS daemons are running, try copying a file to HDFS.
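Here is a Python sketch of this step. It assumes that start-dfs.cmd and hdfs.cmd live under the sbin and bin folders of the install directory, and that myfile.txt is any local text file. The helper names are hypothetical.

```python
import ntpath
import subprocess


def hdfs_smoke_test(prefix, local_file):
    """Command lines that start HDFS, copy one file into its root, and list it."""
    hdfs = ntpath.join(prefix, "bin", "hdfs.cmd")
    return [
        [ntpath.join(prefix, "sbin", "start-dfs.cmd")],
        [hdfs, "dfs", "-put", local_file, "/"],
        [hdfs, "dfs", "-ls", "/"],
    ]


def run_all(commands):
    """Run each command in order, stopping at the first failure."""
    for cmd in commands:
        subprocess.run(cmd, check=True)
```

start-dfs.cmd launches the daemons and returns right away. In practice you may need to wait until the NameNode is up and out of safe mode before the -put succeeds.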

3.6. Start YARN Daemons and run a YARN job

Finally, start the YARN daemons.

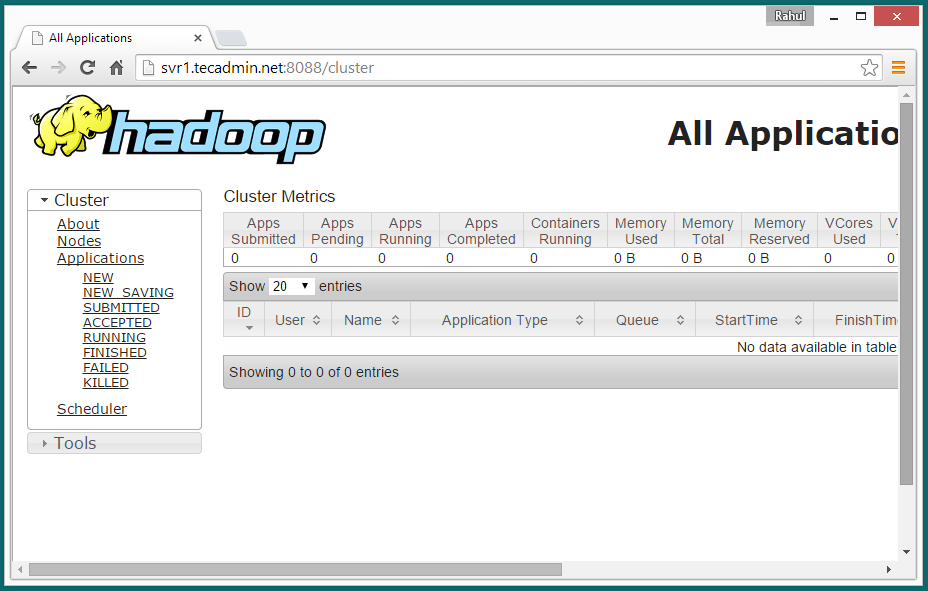

The cluster should be up and running! To verify, we can run a simple wordcount job on the text file we just copied to HDFS.
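Here is a Python sketch of starting YARN and running the bundled wordcount example. It assumes the examples jar follows the hadoop-mapreduce-examples-<version>.jar naming under share\hadoop\mapreduce, and that the input file was copied to /myfile.txt. All paths are placeholders and the helper name is hypothetical.

```python
import ntpath


def wordcount_commands(prefix, version, hdfs_input, hdfs_output):
    """Command lines that start YARN, run wordcount, and print the reducer output."""
    examples_jar = ntpath.join(
        prefix, "share", "hadoop", "mapreduce",
        f"hadoop-mapreduce-examples-{version}.jar",
    )
    return [
        [ntpath.join(prefix, "sbin", "start-yarn.cmd")],
        [ntpath.join(prefix, "bin", "yarn.cmd"), "jar", examples_jar,
         "wordcount", hdfs_input, hdfs_output],
        [ntpath.join(prefix, "bin", "hdfs.cmd"), "dfs", "-cat",
         hdfs_output + "/part-r-00000"],
    ]
```

The output directory must not exist before the job runs, because MapReduce refuses to overwrite an existing output path.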

4. Multi-Node cluster

TODO: Document this

5. Conclusion

5.1. Caveats

The following features are yet to be implemented for Windows.

- Hadoop Security

- Short-circuit reads

5.2. Questions?

If you have any questions you can request help from the Hadoop mailing lists. For help with building Hadoop on Windows, send mail to common-dev@hadoop.apache.org. For all other questions send email to user@hadoop.apache.org. Subscribe/unsubscribe information is included on the linked webpage. Please note that the mailing lists are monitored by volunteers.

After extracting Hadoop to my C: drive, I tried to run the hadoop version command and got the error below. JAVA_HOME is set correctly in my environment variables. Can anybody help with this error?


Most of the answers suggest copying the JDK installation to a path without spaces. However, if you are not comfortable doing that, you can use the Windows short path name so that all applications can access the path without any hassles.

Notation for setting the environment variable if the path contains spaces:

Just faced the same issue (Win 8.1 + Hadoop 2.7.0 [built from sources]).

The problem turned out to be the good old space in the path where Java is located (under the C:\Program Files directory). What I did was this:

1) Copy the JDK directory to C:\Java\jdk1.8.0_40

2) Edit etc\hadoop\hadoop-env.cmd and change it to: set JAVA_HOME=c:\Java\jdk1.8.0_40

3) Open cmd and execute hadoop-env.cmd

4) Now check whether 'hadoop version' still complains (mine didn't)

Install the JDK to a folder with no spaces. Instead of C:\Program Files\Java\jdk1.8.x_xx, try C:\java\jdk1.8.x_xx.

The reason for your error is the space in 'Program Files'. Replace it with PROGRA~1 in all the paths while configuring.

Check your JAVA_HOME.

If it is C:\Program Files\Java\jdk1.7.0_65, then you will encounter such issues. I moved it to C:\MyDrive\Java\jdk1.7.0_65 and it worked out. The space in 'Program Files' creates issues.

PATH should be %PATH%;%JAVA_HOME%\bin

If you are using Windows, you are likely to face errors like these (due to x64 vs. x86 issues):

FATAL datanode.DataNode: Exception in secureMain java.lang.NullPointerException

and

FATAL namenode.NameNode: Failed to start namenode. java.lang.UnsatisfiedLinkError: org.apache.hadoop.io.nativeio.NativeIO$Windows.access0(Ljava/lang/String;I)

Copy these files: hadoop.dll, hadoop.exp, hadoop.lib, hadoop.pdb, libwinutils.lib, winutils.exe, winutils.pdb from the link to the bin folder of your Hadoop installation, which looks like this: .\HadoopInstalled\hadoop\hadoop-2.6.0\hadoop-2.6.0\bin
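To confirm the copy worked, a short Python check can compare the bin folder against the seven files this answer lists. The helper name is hypothetical. File names are compared case-insensitively, as Windows does.

```python
import os

# File list taken from this answer.
REQUIRED_NATIVE_FILES = [
    "hadoop.dll", "hadoop.exp", "hadoop.lib", "hadoop.pdb",
    "libwinutils.lib", "winutils.exe", "winutils.pdb",
]


def missing_native_files(bin_dir):
    """Return the required files that are not present in bin_dir."""
    present = {name.lower() for name in os.listdir(bin_dir)}
    return [name for name in REQUIRED_NATIVE_FILES if name.lower() not in present]
```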

Add your Java bin location to your PATH environment variable, like:


To get around having to install another JDK in a path that does not have a space, you can create a symbolic link with the Windows command mklink. Here's how.

- Open a cmd prompt as administrator.

- Navigate to C:\ if the cmd prompt does not open there.

Create the symbolic link. Here, I'm mapping the path that Hadoop complains about (the one with Program Files) to a simpler path without spaces. The /D argument means you are creating a directory symbolic link.

mklink /D java_home "C:\Program Files\Java\jdk1.7.0_65"

In your hadoop-env.cmd, set your JAVA_HOME to the symbolic link you created:

set JAVA_HOME=C:\java_home
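The same directory link can be created from Python with os.symlink, shown here as a sketch. The helper make_java_home_link is hypothetical. As with mklink /D, creating symbolic links on Windows generally requires an elevated prompt or Developer Mode.

```python
import os


def make_java_home_link(jdk_dir, link_path):
    """Create a directory symlink at link_path pointing to jdk_dir (like mklink /D)."""
    if os.path.lexists(link_path):
        raise FileExistsError(f"{link_path} already exists")
    # target_is_directory=True matters on Windows, where file and directory links differ.
    os.symlink(jdk_dir, link_path, target_is_directory=True)
    return link_path
```

For example: make_java_home_link(r"C:\Program Files\Java\jdk1.7.0_65", r"C:\java_home").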

This is what worked for me. More information on creating symbolic link in Windows: http://www.windows7home.net/how-to-create-symbolic-link-in-windows-7/

The solution to this problem is simple.

Most folks will be setting JAVA_HOME to C:\Program Files\Java\jdk1.8.0_121

The problem here is the spaces. What you need to do is copy the contents of jdk1.8.0_121 to a folder on the C: drive, say C:\java

Now use this path as your JAVA_HOME.

Open a command prompt and run echo %JAVA_HOME% to check whether JAVA_HOME is set. If it is not, set JAVA_HOME.

Check here for how to set JAVA_HOME in Windows.

I also faced a similar issue; the following steps solved the error for me.

Download & install Java in c:/java/

(Make sure the path looks like this; if Java is installed in Program Files, hadoop-env.cmd will not recognize the Java path.)


Download Hadoop binary distribution.

Set Environment Variables:

Here is a GitHub link which has winutils for some versions of Hadoop.

(If the version you are using is not in the list, then follow the conventional method for setting up Hadoop on Windows - link)

If you found your version, then copy and paste all the contents of the folder into the path: /bin/

Set all the .xml configuration files - Link

And finally, set the JAVA_HOME path in the hadoop-env.cmd file.

This will probably solve the 'JAVA_HOME is incorrectly set.' error.

Hope this helps.

Please add the following line in hadoop-env.cmd. This should be the first line in hadoop-env.cmd after the comments.

This shortened Progra~1 name is a short file name (SFN).

This avoids the need to copy Java to some other directory, as proposed in some other answers, and you can keep a Java version that is specific to Hadoop.

You can debug the hadoop.cmd file in the bin folder; some command in it may have a syntax issue or an improper path.

Open the hadoop.cmd file; the first line will be '@echo off'. Change '@echo off' to '@echo on' and save it. Now run the 'hadoop -version' command. It will show you which command triggers the 'syntax of the command is incorrect' error. Correct it, whether it is a syntax problem or a path issue.

'@echo on' helps trace the error. Here, the message is coming from the hadoop-config.cmd file. Please copy C:\Program Files\Java to C:\Java, change the path, and try again. This will work.

Just remove the bin folder from the Java path in the hadoop-env.cmd file (C:\hadoop-2.8.0\etc\hadoop\hadoop-env.cmd), i.e. 'C:\Java\jdk-9.0.1\bin', and set the path I mentioned below. This worked for me.